How to Build Trust and Transparency in AI Performance Reviews

TL;DR: AI-powered performance reviews promise greater fairness and efficiency, but 70% of organizations still struggle to earn employee trust in these systems. While 80% of employees believe AI could be fairer than human managers, concerns about bias, transparency, and privacy remain barriers. This guide covers evidence-based strategies for building trust in AI performance management, including explainable AI, human-in-the-loop oversight, regular bias audits, and a phased rollout. It also explains what to look for in a vendor and gives examples of solutions designed to support reliable, unbiased, and trusted performance review workflows.

Imagine this: Your company rolls out a new AI-powered performance review system. Employees are hopeful; after all, over 80% believe AI could be fairer than their human managers. But soon, questions and doubts start to bubble up. Can they trust the system? Will it really be fair?

This is the trust paradox at the heart of AI in performance management. In this post, we’ll break down why trust matters, what can go wrong, and, most importantly, how you can build confidence in your AI-driven review process.

Artificial intelligence is transforming performance management and giving managers new tools to evaluate and develop their teams. While AI promises to reduce bias and improve consistency, building trust in these systems is not automatic. Even though most employees believe AI could be fairer than human managers, 70% of organizations still struggle to build trust, which limits adoption and impact.

With 42% of organizations reporting more streamlined workflows after adopting AI, it’s clear that these technologies are here to stay. That makes it even more important to understand and address trust issues for successful implementation and long-term results.

Why Trust Matters: The Current State

The trust gap in AI-driven performance management is the result of uncertainty, which often leads to fear among potential adopters. Employees are eager for AI to help reduce favoritism and bring more fairness to reviews, but they also worry about losing control, being misunderstood, or even being replaced.

Low trust between employees and performance management processes has tangible consequences, including:

- Falling engagement

- Slowing adoption

- Growing legal/ethical risk exposure

- Increased HR administrative labor

By contrast, organizations that build trust in their AI systems see higher engagement, better adoption, and a stronger sense of fairness. When employees believe the process is transparent and fair, they feel respected and empowered, which leads to better outcomes for everyone.

Understanding Employee Concerns

Organizations must understand how trust is lost in order to retain and build it. Trust is often eroded before employees ever reject a new tool, usually when systems fail to address their core concerns about fairness, privacy, and transparency.

A 2024 McKinsey survey found that when asked about AI in performance evaluations, employees repeatedly cited the same key concerns:

- Cybersecurity risks (51%)

- Inaccuracies/hallucinations (50%)

- Personal privacy (43%)

- Explainability (34%)

- Equity and fairness (30%)

Ultimately, these concerns all point to a lack of trust and a fear that the system will be unfair. For employees, an inaccurate or unexplained AI decision is not just a technical glitch; it can have real consequences for their careers, reputation, and sense of belonging. If an AI system misinterprets their actions or cannot clearly explain its reasoning, employees may feel powerless or even at risk of being treated unjustly. This kind of disconnect can quickly turn employees against the very tools meant to support them.

The Bias Challenge: What Can Go Wrong

Understanding how the perception of bias enters a performance management system is just as important as understanding how actual bias can affect AI-powered performance evaluations.

Research from Indiana University’s Kelley School of Business found that underrepresented minorities tend to put more effort into their work when evaluated by AI, while majority group members may put in less. This pattern suggests that employees who have experienced bias in traditional reviews may see AI as a fairer evaluator, leading to increased motivation. Meanwhile, those who may have benefited from human bias could feel less incentivized when those advantages are removed. These findings highlight how trust and perceptions of fairness in AI-driven performance reviews can differ significantly across employee groups.

AI can help correct several well-known human biases, including:

- Recency Bias: The tendency to weigh recent events more heavily (for example, an employee receives a negative review because of one poor week despite a high-performing year).

- Halo/Horns Effect: Letting one positive trait define the whole evaluation (halo) or fixating on a single flaw (horns).

- Confirmation Bias: When managers unconsciously seek to confirm their assumptions, such as by interpreting an isolated mistake as part of a known pattern of behavior.

- Leniency/Strictness Bias: When managers skew ratings up or down based on their own personality or expectations rather than on performance data.

- Similarity Bias: Favoring employees who remind managers of themselves, or judging employees harshly for flaws the manager recognizes in themselves or in other poorly rated employees.
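To illustrate how a system might counter recency bias, the sketch below checks a final rating against check-in scores from the whole review period. It is a minimal Python example, not any vendor’s method: the function name `recency_flag`, the assumption that check-ins and final ratings share one numeric scale, and the 0.75-point tolerance are all hypothetical choices for illustration.

```python
from statistics import mean

def recency_flag(checkins, final_rating, tolerance=0.75):
    """Flag a possible recency effect in a final rating.

    checkins: chronological scores for the review period, on the same
    scale as final_rating (an assumption of this sketch).
    Returns True when the final rating is far from the full-period
    average but closer to the most recent check-in.
    """
    if not checkins:
        raise ValueError("need at least one check-in score")
    full_period = mean(checkins)
    latest = checkins[-1]
    far_from_average = abs(final_rating - full_period) > tolerance
    closer_to_latest = abs(final_rating - latest) < abs(final_rating - full_period)
    return far_from_average and closer_to_latest

# A strong year with one weak final quarter, rated on the last quarter alone.
print(recency_flag([4.5, 4.6, 4.4, 2.0], final_rating=2.5))  # True
# A rating consistent with the whole year is not flagged.
print(recency_flag([4.0, 4.2, 3.9, 4.1], final_rating=4.0))  # False
```

A flag like this is a prompt for the manager to revisit the rating, not an automatic correction.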

AI performance management systems lack the emotional context that creates these biases in human managers. However, bias can enter AI-powered systems as well, and organizations must be prepared to confront these new biases introduced through the data, design, and deployment of the algorithms.

Examples of biases unique to AI systems can include:

- Amplification of historical bias, where the AI learns patterns from past outcomes and automates decisions that reproduce them.

- Over-reliance on quantitative metrics, which may distort assessments by undervaluing intangible achievements in favor of quantified KPIs.

- Gaming behaviors, which occur when employees focus on optimizing their actions to achieve higher scores in AI-driven assessments, rather than genuinely improving their performance or contributing to organizational goals.

For example, if the data used to train an AI system reflects past inequalities, the AI may unintentionally reinforce those patterns, even if an employee’s performance is strong. To prevent this, organizations need to use diverse, representative data, conduct regular bias audits, and update their models as needed. Ongoing monitoring and transparency about how bias is addressed are essential for maintaining employee trust and ensuring fair outcomes.
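A first step toward diverse, representative data is comparing who appears in the training data with who is in the current workforce. This minimal sketch assumes each record carries a single demographic group label; the helper names and the 5-percentage-point tolerance are hypothetical, and a real audit would examine more than headcount share.

```python
from collections import Counter

def group_shares(labels):
    """Return each group's share of the records (shares sum to 1.0)."""
    counts = Counter(labels)
    total = sum(counts.values())
    if not total:
        return {}
    return {group: n / total for group, n in counts.items()}

def representation_gaps(training_groups, workforce_groups, tolerance=0.05):
    """Groups whose share of the training data differs from their share of
    the current workforce by more than `tolerance` (absolute difference).
    Positive values mean the group is over-represented in training data."""
    train = group_shares(training_groups)
    work = group_shares(workforce_groups)
    gaps = {}
    for group in sorted(set(train) | set(work)):
        diff = train.get(group, 0.0) - work.get(group, 0.0)
        if abs(diff) > tolerance:
            gaps[group] = round(diff, 3)
    return gaps

# Hypothetical example: historical reviews skew toward group A.
training = ["A"] * 80 + ["B"] * 20
workforce = ["A"] * 60 + ["B"] * 40
print(representation_gaps(training, workforce))  # {'A': 0.2, 'B': -0.2}
```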

A Note on Legal & Regulatory Requirements

Besides focusing on quality and employee engagement, organizations must also follow legal and regulatory rules when using AI for performance reviews. In the U.S., the Equal Employment Opportunity Commission (EEOC) clarifies the liabilities that employers face regarding discrimination, even when performance evaluations are conducted by machines.

Many regions have similar or even stricter requirements. For example, New York City’s Local Law 144 requires independent bias audits of automated employment decision tools used in hiring and promotion decisions, while the European Union’s AI Act classifies AI used in employment, including systems that evaluate worker performance, as “high risk” and sets strict standards for transparency and fairness.

Following these and other local guidelines requires regular audits, transparent practices, frequent bias testing, and employee notification after any changes in methodology. By staying current with evolving regulations, organizations not only reduce legal risk but also demonstrate a commitment to fairness and transparency, which helps build trust with employees.

4 Key Trust-Building Strategies

Organizations deploying AI performance review systems face trust issues at both the employee and manager levels. To address these challenges, leading organizations rely on four strategies to build trust in AI performance reviews:

Transparency

Transparency establishes trust by reinforcing the purpose of AI, the context of its deployment, and the oversight that will control its influence. For example, many employees wonder whether their role will be replaced by AI; transparent communication clarifies that AI is a tool meant to support their work, not replace them.

Additionally, employees wonder who oversees AI and approves its output. Continuous communication reassures them that human managers remain a part of the loop and that an ongoing channel for feedback and employee education will remain open, despite certain aspects of the performance review process becoming automated.

Why It Works

When organizations balance transparency with discretion, employees are more likely to trust their reviewers. In practice, managers must communicate their methods openly while exercising enough discretion to maintain confidentiality and professionalism. They should be open about the AI’s data sources, decision logic, human oversight, and the appeal process available to employees. This can be done through ongoing updates, regularly refreshed FAQs, and tailored messaging for different employee groups. Transparency is an ongoing, evolving process, not a one-time checkbox.

Human-in-the-Loop

AI generates better insights when overseen by human moderators. When biases emerge, such as contextual or historical generalizations, human oversight helps ensure that deserving employees are not passed over for promotion or wrongfully terminated. Intentional, visible oversight reassures employees that while the data is AI-driven, a real person is checking the fairness of the assessment.

Critical decisions, such as promotions, pay changes, or terminations, should always involve human review. Allowing managers to override or question AI-generated results maintains accountability and builds trust in the process.
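The rule that critical decisions always involve human review can be enforced in the workflow itself rather than left to policy. The sketch below is a hypothetical design, not a specific product’s workflow: the decision types in `CRITICAL_DECISIONS`, the record fields, and the `finalize` function are illustrative assumptions. It refuses to finalize a critical decision without a named reviewer and records whether the reviewer overrode the AI suggestion.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical decision types that must never take effect without a human reviewer.
CRITICAL_DECISIONS = {"promotion", "pay_change", "termination"}

@dataclass
class Recommendation:
    employee_id: str
    decision_type: str
    ai_suggestion: str
    rationale: str          # the AI's explanation, shown to the reviewer

@dataclass
class FinalDecision:
    employee_id: str
    decision_type: str
    outcome: str
    decided_by: str         # "ai" or the reviewing manager's ID
    overridden: bool        # True when the human changed the AI suggestion
    note: str = ""

def finalize(rec: Recommendation, reviewer_id: Optional[str] = None,
             reviewer_outcome: Optional[str] = None, note: str = "") -> FinalDecision:
    """Turn an AI recommendation into a decision, enforcing human review where required."""
    if reviewer_id is None:
        if rec.decision_type in CRITICAL_DECISIONS:
            raise PermissionError(f"{rec.decision_type} requires human review")
        # Non-critical item accepted as suggested, but logged as AI-decided.
        return FinalDecision(rec.employee_id, rec.decision_type,
                             rec.ai_suggestion, "ai", False, note)
    # A reviewer may accept the suggestion (no outcome given) or override it.
    outcome = rec.ai_suggestion if reviewer_outcome is None else reviewer_outcome
    return FinalDecision(rec.employee_id, rec.decision_type, outcome,
                         reviewer_id, outcome != rec.ai_suggestion, note)

rec = Recommendation("E42", "promotion", "defer", "Goals met in 3 of 4 quarters")
try:
    finalize(rec)
except PermissionError as err:
    print(err)  # promotion requires human review
decision = finalize(rec, reviewer_id="M7", reviewer_outcome="approve",
                    note="Led a migration the metrics did not capture")
print(decision.overridden)  # True
```

Logging `decided_by` and `overridden` for every item is the design choice that matters here: it makes human involvement visible to employees and leaves an audit trail that can be reviewed later.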

Why It Works

Keeping a human in the loop gives AI performance reviews strategic checkpoints, with clear documentation at each step. However, placing a human in the loop is usually not enough on its own; employees need transparent reassurance that their assessment remains human-led despite the use of technology to aid the process. This requires effective communication.

Employee Participation

Giving employees partial ownership of the performance review process lowers their resistance to deployment and helps managers identify the practical limitations of AI performance management early. Employees who participate tend to see the process as more controllable, transparent, and fair, which builds advocacy and trust across employee groups and makes them less likely to view the process as biased.

Approaches to Consider

When encouraging employee participation, managers can begin with pilot testing among selected groups. Advisory committees can give employee representatives a voice, while continuous feedback mechanisms let managers adjust the process as it evolves. Where possible, unions should be involved to represent employees’ interests. At every stage of the performance management flow, employees should receive clear advance notice of changes, with explanations, and should feel free to voice their concerns.

Bias Testing & Auditing

Frequent auditing builds trust in AI performance tools, and audit plans should account for local laws. New York City’s Local Law 144, for example, requires that covered tools have an independent bias audit no more than one year before use, while other jurisdictions and federal agencies have their own regulations. Top-performing organizations use every tool at their disposal to make sure compliance is not only achieved in the short term but also monitored and maintained over time. Common practices include:

- Monthly performance monitoring checks

- Quarterly stakeholder reviews

- Fairness metrics tracked by demographic groups

- Third-party audit tools

- Internal review boards

Fairness metrics will differ by industry and organization, but can include promotion rates, review scores, appeal and dispute rates, false positive and false negative rates, and pay equity, each broken down by demographic group.
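Demographic breakdowns like these are often summarized as impact ratios: each group’s selection rate (here, the promotion rate) divided by the rate of the most-selected group. This is the ratio NYC Local Law 144 bias audits report, and a ratio below 0.8 corresponds to the “four-fifths” screening heuristic in the EEOC’s Uniform Guidelines. The minimal sketch below assumes promotion outcomes are available as `(group, promoted)` pairs; the function names are hypothetical, and a flag is a prompt for investigation, not a legal finding.

```python
from collections import defaultdict

def selection_rates(records):
    """records: iterable of (group, promoted) pairs. Returns promotion rate per group."""
    totals, promoted = defaultdict(int), defaultdict(int)
    for group, was_promoted in records:
        totals[group] += 1
        promoted[group] += int(was_promoted)
    return {g: promoted[g] / totals[g] for g in totals}

def impact_ratios(rates):
    """Each group's rate divided by the highest group's rate."""
    top = max(rates.values())
    if top == 0:
        return {g: 1.0 for g in rates}  # nobody selected: no disparity to measure
    return {g: r / top for g, r in rates.items()}

def flag_adverse_impact(records, threshold=0.8):
    """Groups whose impact ratio falls below the four-fifths threshold."""
    ratios = impact_ratios(selection_rates(records))
    return {g: round(r, 2) for g, r in ratios.items() if r < threshold}

# Hypothetical promotion outcomes for one review cycle.
records = ([("A", True)] * 15 + [("A", False)] * 35 +
           [("B", True)] * 8 + [("B", False)] * 32)
print(selection_rates(records))      # {'A': 0.3, 'B': 0.2}
print(flag_adverse_impact(records))  # {'B': 0.67}
```

The same pattern applies to other fairness metrics in the list, such as appeal rates or high review scores, by changing what counts as a "selection."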

In addition to these tests, organizations should “red-team” their performance reviews against attempts to game the system. This means testing edge cases and gaming scenarios that employees might use to manipulate the AI into producing particular results. Frequent testing and audits help surface these weaknesses before employees find them.
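Part of a red-team exercise can be automated as a perturbation test: apply edits that add no new evidence of performance and confirm the score does not rise. In this minimal sketch, `naive_score` is a deliberately gameable, hypothetical keyword-counting scorer standing in for whatever model is under test, and the two perturbations are illustrative examples, not a complete attack list.

```python
from typing import Callable, Dict

# Hypothetical naive scorer: counts achievement keywords in a self-review.
# It is deliberately gameable so the red-team check has something to catch.
KEYWORDS = ("delivered", "led", "improved", "shipped")

def naive_score(text: str) -> float:
    words = text.lower().split()
    return float(sum(words.count(k) for k in KEYWORDS))

# Perturbations that should NOT raise a fair score: they add no new evidence.
PERTURBATIONS: Dict[str, Callable[[str], str]] = {
    "duplicate_text": lambda t: t + " " + t,
    "keyword_stuffing": lambda t: t + " led delivered improved shipped",
}

def red_team(score_fn: Callable[[str], float], sample: str,
             tolerance: float = 0.0) -> Dict[str, float]:
    """Return the score increase for each perturbation that exceeds `tolerance`."""
    baseline = score_fn(sample)
    findings = {}
    for name, perturb in PERTURBATIONS.items():
        delta = score_fn(perturb(sample)) - baseline
        if delta > tolerance:
            findings[name] = delta
    return findings

sample = "Led the billing migration and improved invoice accuracy."
print(red_team(naive_score, sample))
# {'duplicate_text': 2.0, 'keyword_stuffing': 4.0}
```

Against this toy scorer the check reports both attacks, which is the intended result: a scorer that rewards repetition or buzzwords should fail this kind of test before it reaches employees.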

Implementation Framework

A phased approach to implementing AI in performance management helps organizations build trust and ensure a smooth transition. This approach gives employees and managers time to adapt while introducing key processes, and can be followed on a timeline that is flexible and adaptable to your organization’s specific needs.

Phase 1: Assessment & Planning

The first phase of AI deployment involves assessing the current state of the organization’s review workflow. This includes clearly defining objectives and success metrics and identifying stakeholders’ key pain points. As the governance framework is built, leadership and employees can be gradually phased into their new workflows. This phase may take anywhere from a few weeks to a couple of months, depending on your organization’s complexity.

Phase 2: Pilot Program

Test the AI review system with a pilot group to gather feedback and identify any issues. This will include training workflows for managers on the features and limitations of their AI-driven review system, bias monitoring, and parallel system tests. If AI workflows need to retain some traditional feedback systems, they should do so; effectiveness is more important than technology adoption for its own sake. The pilot can be as short as a few weeks or extended as needed to ensure confidence in the system.
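For the parallel system tests, one simple approach is to have managers and the AI rate the same pilot employees independently, then compare the results. The sketch assumes both ratings use the same numeric scale and that a gap of two points or more warrants human review; both assumptions, and the function name, are hypothetical.

```python
from statistics import mean

def compare_parallel_ratings(ai, manager, flag_gap=2):
    """Compare AI and manager ratings for the same pilot employees.

    ai, manager: dicts of employee_id -> rating on the same numeric scale.
    Returns agreement statistics and the employees whose ratings differ
    by at least `flag_gap` points, for a human to look at.
    """
    shared = sorted(set(ai) & set(manager))
    if not shared:
        raise ValueError("no employees rated by both systems")
    gaps = {e: abs(ai[e] - manager[e]) for e in shared}
    return {
        "n": len(shared),
        "exact_agreement": sum(g == 0 for g in gaps.values()) / len(shared),
        "mean_abs_diff": mean(list(gaps.values())),
        "needs_review": [e for e in shared if gaps[e] >= flag_gap],
    }

# Hypothetical pilot ratings on a 1-5 scale.
ai_ratings = {"E1": 4, "E2": 3, "E3": 5, "E4": 2}
manager_ratings = {"E1": 4, "E2": 3, "E3": 3, "E4": 3}
print(compare_parallel_ratings(ai_ratings, manager_ratings))
# {'n': 4, 'exact_agreement': 0.5, 'mean_abs_diff': 0.75, 'needs_review': ['E3']}
```

Large disagreements are not automatically the AI’s fault; reviewing them with the manager is how the pilot surfaces both AI limitations and human bias.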

Phase 3: Iterative Rollout

Expand the AI workflow to additional departments or teams, using lessons learned from the pilot. At this stage, communication is key: clarify the successes and limitations found in earlier tests, and establish ongoing monitoring systems. Rollout can be gradual or accelerated, depending on organizational readiness and feedback.

Phase 4: Full Deployment

As company-wide implementation approaches, organizations must build a culture of continuous monitoring and improvement. Maintaining ethical and trustworthy AI workflows requires trial and error as employees and managers adapt, receive new training and support, and continue communicating their needs.

This phased rollout allows organizations to identify and address issues early, gather feedback, and make improvements before scaling up AI performance reviews across the company.

Vendor Selection Guide

To successfully adopt AI performance management systems, organizations need a vendor that will work with their teams to build trust, prevent bias, maintain security and compliance, and retain human oversight. This includes regular testing, adaptive approval workflows, and change management support tailored to the company’s needs.

When evaluating vendors, organizations should watch for red flags such as a lack of transparency about how outputs are generated (so-called “black box” algorithms), missing documentation on bias reduction, few or no compliance certifications, poor customer reviews, or a lack of human oversight.

To mitigate the risks of these red flags, IT and HR leaders should consider these critical questions when evaluating vendors:

- What is your specific process to reduce AI bias?

- How do you test bias in different demographic groups?

- What data trained your AI models, and how representative is it of our current workforce and organizational structure?

- How does your system flag and respond to biased language?

- How does the AI explain performance recommendations?

- Can managers see how data inputs lead to outputs?

- What privacy, security, and industry certifications does your organization have?

- How does human oversight fit in your review process?

- Can managers override your AI’s recommendations?

- What metrics prove that outcomes are improving?

The right vendor should not only answer these questions, but also demonstrate how their platform supports trust and transparency at every step.

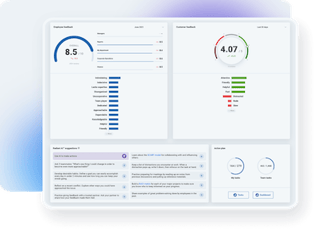

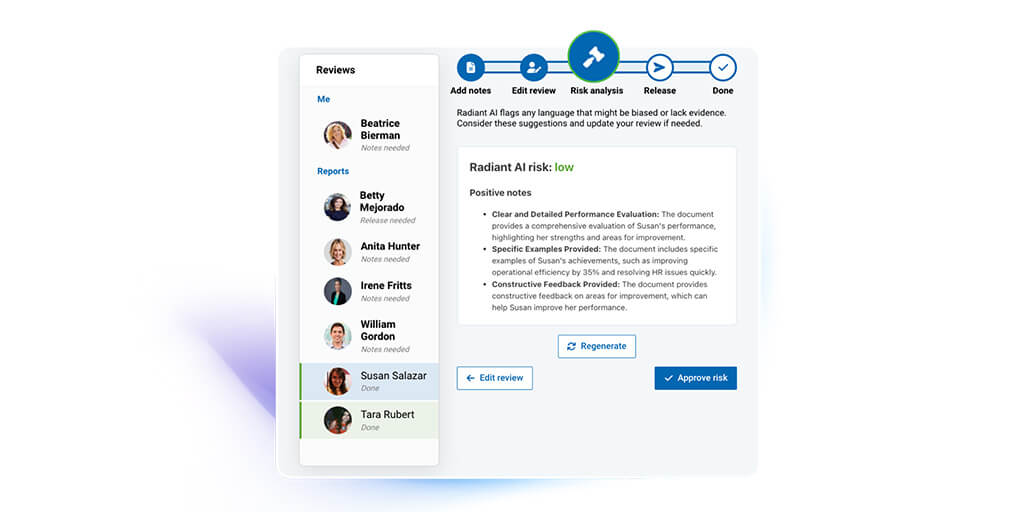

Macorva’s AI-guided performance review workflow is built to address these needs. The platform automatically detects and flags potential bias, explains how each review decision is made, and allows managers to review and override AI-generated recommendations. Macorva integrates with your existing HR systems, provides clear audit trails, and delivers easy-to-understand reports for both employees and leaders. This ensures every step of the review process is transparent, fair, and aligned with your organization’s goals.

By choosing a solution like Macorva, organizations can feel confident that their performance management process is both effective and trusted by employees and leadership alike.

Measuring Success: Trust Metrics

Trust is not established once and then left alone; organizations must work to maintain and improve it across all demographics, leadership, and employee groups. To do this, they need to measure success accurately, using both direct and indirect measures of trust.

Examples of direct trust measures that should be high priorities include:

- Employee trust surveys

- Manager confidence ratings

- System usage rates

- Override frequency

- Appeal/dispute rates

These measures should be taken before and after AI deployment so the organization can see what changed and refine its approach. In addition to direct measures, organizations should also consider indirect measures, such as:

- Voluntary attrition rates

- Employee engagement scores

- Performance review completion rates

- Time to complete reviews

- Quality of feedback

Tracking these metrics over time helps organizations identify areas for improvement and demonstrate progress in building trust. Benchmarks for each metric should be set, and results should be clearly communicated to leadership and employees to ensure that building trust in new workflows is an ongoing and continuously improving process.
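Comparing each metric with its pre-deployment baseline and a benchmark can stay lightweight. This sketch assumes each metric has a baseline, a current value, a target, and a known direction (for appeal rates lower is better; for trust-survey scores higher is better). The `Metric` class, the `rate` helper, and the example numbers are hypothetical; override frequency could be tracked the same way with `rate`.

```python
from dataclasses import dataclass

@dataclass
class Metric:
    name: str
    baseline: float        # measured before AI deployment
    current: float         # latest measurement
    target: float          # benchmark the organization has set
    higher_is_better: bool

    def change(self) -> float:
        return self.current - self.baseline

    def meets_target(self) -> bool:
        if self.higher_is_better:
            return self.current >= self.target
        return self.current <= self.target

def rate(events: int, total: int) -> float:
    """Share of reviews with a given event, such as an appeal or an override."""
    return events / total if total else 0.0

def trust_report(metrics):
    """One (name, change since baseline, target met?) row per metric."""
    return [(m.name, round(m.change(), 3), m.meets_target()) for m in metrics]

# Hypothetical figures for a 300-review cycle before and after deployment.
metrics = [
    Metric("appeal_rate", rate(18, 300), rate(9, 300), target=0.04, higher_is_better=False),
    Metric("trust_survey_avg", 3.4, 3.8, target=4.0, higher_is_better=True),
]
for row in trust_report(metrics):
    print(row)
# ('appeal_rate', -0.03, True)
# ('trust_survey_avg', 0.4, False)
```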

Frequently Asked Questions about AI Performance Reviews

Whether you should use AI for performance reviews depends on your organization’s goals and needs. AI can improve efficiency, consistency, and help reduce bias, but it also requires oversight, transparency, and regular auditing to ensure fairness.

AI in performance reviews can be designed to summarize data and cite its sources, which helps reduce the risk of bias compared to traditional methods. By relying on structured data and transparent processes, AI tools can provide more objective and consistent insights.

When communicating about AI in performance reviews, it is important to explain that the system organizes information, summarizes data, and helps compile reports, but does not make final performance decisions. Make sure employees understand how the AI works, what data it uses, and that managers or HR are responsible for all final decisions.

AI can be more fair than human managers in some cases, as it can apply consistent criteria and reduce certain human biases. However, the fairness of AI depends on the quality of the data, the design of the algorithms, and the presence of human oversight.

Employees can trust AI performance reviews when organizations are transparent about how AI is used, provide clear explanations for decisions, involve human oversight, and regularly audit the system for bias and accuracy.

Conclusion: The Path Forward

Building trust in AI-powered performance reviews is not a one-time effort but an ongoing process that requires transparency, human oversight, regular auditing, and employee involvement. Well-designed accountability workflows grow out of clear communication in an environment where transparency is non-negotiable, human oversight is visible, monitoring and auditing are continuous, and employee participation is guaranteed. A phased approach helps everyone adapt and keeps the process fair and understandable. With these steps, performance reviews can become more reliable and trusted by everyone involved.

Ready to Build Trust in Your New AI Processes?

HR leaders across industries are adopting new AI-driven processes to write, analyze, and distribute performance reviews. However, overrelying on AI, or rolling it out without clearly explaining its context and purpose, can create trust issues that erode engagement.

Macorva treats AI as a partnership between technology and people, making sure that human oversight is always part of the process. Contact our team to learn how Macorva can help you build fair, transparent performance reviews that industry leaders trust as their organizations grow and change.